Power BI lets users who have access to a dataset in the Power BI service (PowerBI.com) connect to that dataset from Excel. This feature is usually referred to as Analyze in Excel. Once you connect Excel to your dataset, you can create PivotTable reports or use cube functions.

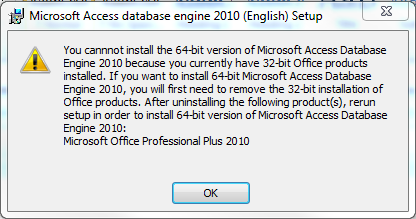

There are currently limitations that mean this functionality isn’t supported for B2B (external) users. An external user is an Azure AD user who is based in another tenant and has been invited as a guest into the local Azure AD tenant. If you go to your dataset in PowerBI.com, choose Analyze in Excel, and then try to open the downloaded file and connect to the dataset, you will be met with connection errors.

But if your dataset is in a workspace backed by Premium Per User or Premium capacity, you can use the XMLA endpoint to connect, even as a B2B user!

Instead of using the Analyze in Excel functionality, you can connect to your dataset as if it were Analysis Services, using the XMLA endpoint. B2B users just need to make one adjustment to the server name they enter to make this work.

In Excel, locate the Get Data button. Select From Database and then From Analysis Services.

Open a browser window and go to the settings of the Power BI workspace that contains the dataset to which you want to connect.

If your workspace is backed by Premium capacity, you will be able to see this in the settings, and the workspace connection will be available for you to copy. If you are a member user (not external), you can copy this value into the Server Name box of the Data Connection Wizard and go on your way.

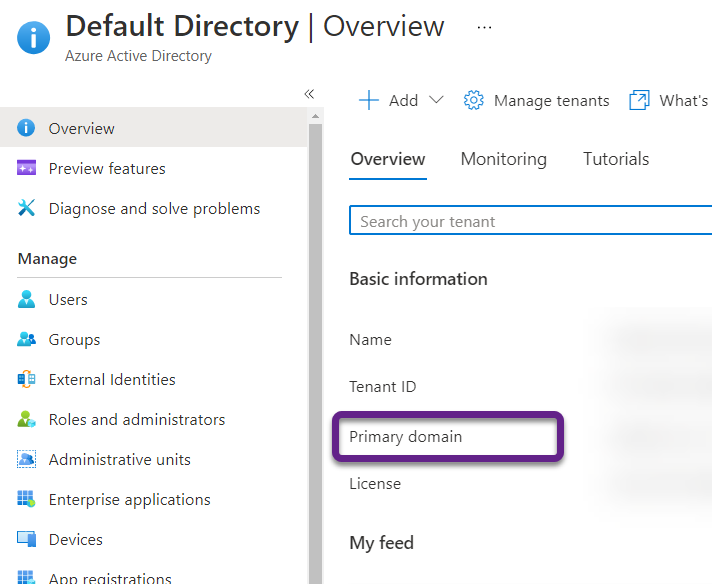

If you are a B2B user, you need to make an adjustment as noted in Microsoft Docs: replace “myorg” in the workspace connection with your primary domain name. If you have access to the Azure portal, you can find the primary domain name on the overview page for Azure Active Directory.

So if the workspace connection from the Power BI service is: powerbi://api.powerbi.com/v1.0/myorg/Demo%20Reports

And your primary domain is: mysupercooldomain.com

Then you would change the workspace connection to:

powerbi://api.powerbi.com/v1.0/mysupercooldomain.com/Demo%20Reports
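If you need to hand this adjusted server name to several guest users, the substitution above can be sketched in a few lines of Python. This is only an illustration of the string change described here, not part of Power BI or Excel: the function name `to_b2b_workspace_connection` is made up for this example, and it assumes the copied workspace connection contains a `/myorg/` segment as shown above.

```python
def to_b2b_workspace_connection(workspace_connection: str, primary_domain: str) -> str:
    """Swap the "myorg" segment of a workspace connection for a primary domain.

    Hypothetical helper: it performs only the text substitution described in
    this post and does not contact the Power BI service.
    """
    # Split once around the "/myorg/" segment; `sep` is empty if it is missing.
    head, sep, workspace = workspace_connection.partition("/myorg/")
    if not sep:
        raise ValueError("Expected a workspace connection containing '/myorg/'")
    # Rebuild the connection with the primary domain in place of "myorg".
    return f"{head}/{primary_domain.strip()}/{workspace}"


if __name__ == "__main__":
    print(
        to_b2b_workspace_connection(
            "powerbi://api.powerbi.com/v1.0/myorg/Demo%20Reports",
            "mysupercooldomain.com",
        )
    )
```

The rest of the connection, including the encoded workspace name, is left untouched, so the result can be pasted straight into the Server Name box.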

Once you have populated the server name with the workspace connection string, change the logon credentials to “Use the following user name and password” but leave the credentials blank. Once you select the Next button, you will be prompted for your Azure credentials.

Then you will be able to select the desired dataset from the workspace and be on your way to making connected Excel reports.