I recently worked on a project where a client wanted to use Biml to create SSIS packages to stage data from Dynamics 365 CRM. My first attempt using a script component had an error, which I think is related to a bug in the Biml engine with how it currently generates script components, so I had to find a different way to accomplish my goal. (If you have run into this issue with Biml, please comment so I know it’s not just me! I have yet to get Varigence to confirm it.) This client owned the Pragmatic Works Task Factory, so we used the Dynamics CRM source to retrieve data.

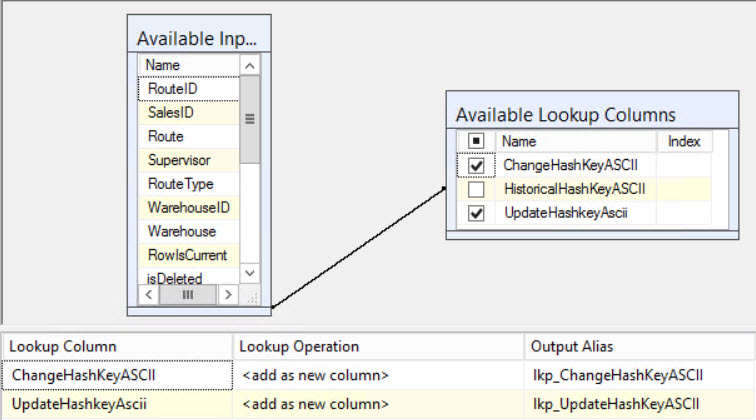

I was ultimately creating a bunch of packages that looked like the below.

There are two pieces to using the Task Factory Dynamics CRM Source: the source component and the connection manager. The code for both is below.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| <!– This goes in your data flow as the source –> | |

| <CustomComponent Name="TF Dynamics 365 <#=table.Name#>" ComponentTypeName="PragmaticWorks.TaskFactory.DynamicsSource" Version="1" ContactInfo="Dynamics Source;Pragmatic Works, Inc; Task Factory (c) 2009 – 2016 Pragmatic Works, Inc; http://www.pragmaticworks.com;support@pragmaticworks.com" UsesDispositions="true"> | |

| <Annotations> | |

| <Annotation AnnotationType="Description">Extract data from Microsoft Dynamics CRM.</Annotation> | |

| </Annotations> | |

| <DataflowOverrides> | |

| <OutputPath OutputPathName="Dynamics Source Output"> | |

| <!– Iterate through the columns (I'm passing in table and column from another file), ignoring any audit columns you may have added to your table. Set the Error Row Disposition and Truncation Row Disposition. –> | |

| <# foreach (var column in table.Columns.Where(c => !c.Name.Equals("AuditETLLogID") && !c.Name.Equals("AuditInsertDate"))) { #> | |

| <Column ErrorRowDisposition="NotUsed" TruncationRowDisposition="NotUsed" ColumnName="<#=column.Name#>" /> | |

| <# } #> | |

| </Columns> | |

| </OutputPath> | |

| </DataflowOverrides> | |

| <CustomProperties> | |

| <CustomProperty Name="DebugMode" DataType="Boolean">false</CustomProperty> | |

| <CustomProperty Name="FilterQuery" DataType="String"></CustomProperty> | |

| <CustomProperty Name="BatchSize" DataType="Int32" Description="The maximum number of records to retrieve per round trip to the dynamics server">500</CustomProperty> | |

| <CustomProperty Name="FetchMode" DataType="Int32" TypeConverter="PragmaticWorks.TaskFactory.Components.Sources.Dynamics.DynamicsSource+FetchModeEnum, PragmaticWorks.TaskFactory.Components130, Version=1.0.0.0, Culture=neutral, PublicKeyToken=47acf905d0337c39" Description="How will data be retrieved from the entity. Name or Xml Query?">0</CustomProperty> | |

| <CustomProperty Name="Entity" DataType="String" SupportsExpression="true" Description="Name of the entity to retrieve data from"><#=table.Name#></CustomProperty> | |

| <CustomProperty Name="QueryXML" DataType="String" SupportsExpression="true" Description="The XML Query"><filter type="and"></filter></CustomProperty> | |

| <CustomProperty Name="Get Changes" DataType="Boolean" SupportsExpression="true" Description="Get the changes for an entity">false</CustomProperty> | |

| <CustomProperty Name="Changes Token Variable" DataType="String" SupportsExpression="true" Description="The variable that will contain the tracking changes token."></CustomProperty> | |

| </CustomProperties> | |

| <OutputPaths> | |

| <OutputPath Name="Dynamics Source Output"> | |

| <OutputColumns> | |

| <!– Add your columns here –> | |

| <# foreach (var column in table.Columns.Where(c => !c.Name.Equals("AuditETLLogID") && !c.Name.Equals("AuditInsertDate"))) { #> | |

| <# if (column.DataType == System.Data.DbType.Int32) { #> | |

| <OutputColumn | |

| Name="<#=column.Name#>" | |

| ExternalMetadataColumnName="<#=column.Name#>" | |

| ErrorOrTruncationOperation="Conversion" | |

| ErrorRowDisposition="FailComponent" | |

| TruncationRowDisposition="FailComponent" /> | |

| <# } else if (column.DataType == System.Data.DbType.String) { #> | |

| <OutputColumn | |

| Name="<#=column.Name#>" | |

| Length="<#=column.Length#>" | |

| DataType="<#=column.DataType#>" | |

| ExternalMetadataColumnName="<#=column.Name#>" | |

| ErrorOrTruncationOperation="Conversion" | |

| ErrorRowDisposition="FailComponent" | |

| TruncationRowDisposition="FailComponent" /> | |

| <# } else if (column.DataType == System.Data.DbType.Guid) { #> | |

| <OutputColumn | |

| Name="<#=column.Name#>" | |

| DataType="Guid" | |

| ExternalMetadataColumnName="<#=column.Name#>" | |

| ErrorOrTruncationOperation="Conversion" | |

| ErrorRowDisposition="FailComponent" | |

| TruncationRowDisposition="FailComponent" /> | |

| <# } else if (column.DataType == System.Data.DbType.Double) { #> | |

| <OutputColumn | |

| Name="<#=column.Name#>" | |

| DataType="Double" | |

| ExternalMetadataColumnName="<#=column.Name#>" | |

| ErrorOrTruncationOperation="Conversion" | |

| ErrorRowDisposition="FailComponent" | |

| TruncationRowDisposition="FailComponent" /> | |

| <# } else if (column.DataType == System.Data.DbType.Currency) { #> | |

| <OutputColumn | |

| Name="<#=column.Name#>" | |

| DataType="Currency" | |

| ExternalMetadataColumnName="<#=column.Name#>" | |

| ErrorOrTruncationOperation="Conversion" | |

| ErrorRowDisposition="FailComponent" | |

| TruncationRowDisposition="FailComponent" /> | |

| <# } else if (column.DataType == System.Data.DbType.Boolean) { #> | |

| <OutputColumn | |

| Name="<#=column.Name#>" | |

| DataType="Boolean" | |

| ExternalMetadataColumnName="<#=column.Name#>" | |

| ErrorOrTruncationOperation="Conversion" | |

| ErrorRowDisposition="FailComponent" | |

| TruncationRowDisposition="FailComponent" /> | |

| <# } else if (column.DataType == System.Data.DbType.DateTime) { #> | |

| <OutputColumn | |

| Name="<#=column.Name#>" | |

| DataType="DateTime" | |

| ExternalMetadataColumnName="<#=column.Name#>" | |

| ErrorOrTruncationOperation="Conversion" | |

| ErrorRowDisposition="FailComponent" | |

| TruncationRowDisposition="FailComponent" /> | |

| <# } } #> | |

| </OutputColumns> | |

| <ExternalColumns> | |

| <!– Add your columns here –> | |

| <# foreach (var column in table.Columns.Where(c => !c.Name.Equals("AuditETLLogID") && !c.Name.Equals("AuditInsertDate"))) { #> | |

| <# if (column.DataType == System.Data.DbType.Int32) { #> | |

| <ExternalColumn | |

| Name="<#=column.Name#>" /> | |

| <# } else if (column.DataType == System.Data.DbType.String) { #> | |

| <ExternalColumn | |

| Name="<#=column.Name#>" | |

| Length="<#=column.Length#>" | |

| DataType="String" /> | |

| <# } else if (column.DataType == System.Data.DbType.Guid) { #> | |

| <ExternalColumn | |

| Name="<#=column.Name#>" | |

| DataType="Guid" /> | |

| <# } else if (column.DataType == System.Data.DbType.Double) { #> | |

| <ExternalColumn | |

| Name="<#=column.Name#>" | |

| DataType="Double" /> | |

| <# } else if (column.DataType == System.Data.DbType.Currency) { #> | |

| <ExternalColumn | |

| Name="<#=column.Name#>" | |

| DataType="Currency" /> | |

| <# } else if (column.DataType == System.Data.DbType.Boolean) { #> | |

| <ExternalColumn | |

| Name="<#=column.Name#>" | |

| DataType="Boolean" /> | |

| <# } else if (column.DataType == System.Data.DbType.DateTime) { #> | |

| <ExternalColumn | |

| Name="<#=column.Name#>" | |

| DataType="DateTime" /> | |

| <# } } #> | |

| </ExternalColumns> | |

| </OutputPath> | |

| </OutputPaths> | |

| <Connections> | |

| <!– Do not change Name of DYNAMICSCONNECTION –> | |

| <Connection Name="DYNAMICSCONNECTION" ConnectionName="TF_DynamicsCRM" /> | |

| </Connections> | |

| </CustomComponent> | |

| <!– This goes in your environments file or elsewhere, just including to keep this with the source component –> | |

| <CustomSsisConnection Name="TF_DynamicsCRM" CreateInProject="true" CreationName="DynamicsCrmConnectionManager" ObjectData="<DynamicsConnectionManager | |

| ConnectionString="UseProxy=False;UseConnectionSharing=False;ServerType=3;CRMVersion=4;OrganizationName=MyOrgName;ProxyHost=;ProxyPort=0;ProxyUser=;ReuseSameConnection=False;ServerHost=disco.crm.dynamics.com;ServerTimeout=60;ServerUser=Myuser@domain.com;"> | |

| <ServerPassword | |

| Sensitive="1" xmlns="www.microsoft.com/SqlServer/Dts" | |

| p4:Salt="mOECnMOaUg==" | |

| p4:IV="Y91tUjUbZZk=" | |

| xmlns:p4="www.microsoft.com/SqlServer/SSIS">DxApS7rkG1qIrWFNXW7lVxhP1NCf5ERQLLuBUoIgh+0Qq2h3j8EltnVddZLUKDcYTisgAp5dUICR827d5VaHqsn2Q2SbWB2Q2XVL5pzd38/E4j+vds1beozDzD10OLdXxql11vCvEE0=</ServerPassword> | |

| </DynamicsConnectionManager>"/> | |

Things to note for the source component:

- This is pulling data for an entity in Dynamics 365. If you were to populate this by hand, the Task Factory UI would ask you which entity. There are several entities that are visible, but do not allow you to retrieve the data. You can find a list of internal entities here. You won’t want to stage data from those tables. I generated all of my packages at once with Biml, using a Blacklist to exclude the internal entities.

- The entity name that should be the value on line 21 is the entity logical name.

- I’m iterating through columns in tables in a separate file and holding them in memory, hence the references to Table.columns. You’ll need to write some code to get the entity metadata to feed that, or find another way to provide the table names and column names and data types.

- You must set the ErrorRowDisposition and TruncateRowDisposition to “NotUsed” as I did on line 10 for each column or your package will not work.

- In OutputColumns and ExternalColumns collections, I just have if statements to check data types. There may be a more elegant way to do this, but this worked for me and I went with it. Feel free to leave suggestions in the comments if you have a better idea.

- The Connection element on line 145 should keep the name “DYNAMICSCONNECTION”. If you change it, the connection won’t work. In my actual project, my connection manager is in a separate file and is named “TF_DynamicsCRM”. You can set the ConnectionName property to whatever you want, as long as it matches your connection definition (Line 153 in the gist).

Things to note for the Connection Manager:

- I tried reverse engineering the connection manager in Biml Studio, and that got me close, but it didn’t get the ObjectData property quite right. I ended up having to create one manually, view the code for it in SSDT, and copy it into my Biml file. I have my packages and project set to EncryptSensitiveWithPassword, which is why you see the p4:Salt value on line 158. Your connection manager will have different values for lines 158, 159, and 160. If you set your project to EncryptSensitiveWithPassword, this value will stay consistent across developers. But if you use EncryptSensitiveWithUserKey, the value may change, which will be fun for regenerating with Biml. So make sure you plan for that if you have multiple developers or multiple computers on which you are working.

- This connection manager is set to connect to Dynamics 365, hence the ServerHost=disco.crm.dynamics.com. If you have an on-prem or hosted environment that isn’t 365, you’ll have to find the correct ServerHost value and put it in line 165.

I hope that helps someone looking to generate the Task Factory Dynamics Source with Biml.