We can now pass dynamic values to linked services at run time in Data Factory. This enables us to do things like connecting to different databases on the same server using one linked service. Some linked services in Azure Data Factory can be parameterized through the UI. Others require that you modify the JSON to achieve your goal.

Recently, I needed to parameterize a Data Factory linked service pointing to a REST API. At this time, REST APIs require you to modify the JSON yourself.

In order to pass dynamic values to a linked service, we need to parameterize the linked service, the dataset, and the activity.

I have a pipeline where I log the pipeline start to a database with a stored procedure, lookup a username in Key Vault, copy data from a REST API to data lake storage, and log the end of the pipeline with a stored procedure. My username and password are stored in separate secrets in Key Vault, so I had to do a lookup with a web activity to get the username. The password is retrieved using Key Vault inside the linked service. Data Factory doesn’t currently support retrieving the username from Key Vault so I had to roll my own Key Vault lookup there.

I have parameterized my linked service that points to the source of the data I am copying. My linked service has 3 parameters: BaseUrl, Username, and SecretName. The JSON for my linked service is below. You can see that I need to reference the parameter as the value for the appropriate property and also define the parameter at the bottom.

{

"name": "LS_RESTSourceParam",

"properties": {

"annotations": [],

"type": "RestService",

"typeProperties": {

"url": "@{linkedService().BaseUrl}",

"enableServerCertificateValidation": true,

"authenticationType": "Basic",

"userName": "@{linkedService().Username}",

"password": {

"type": "AzureKeyVaultSecret",

"store": {

"referenceName": "MyKeyVault",

"type": "LinkedServiceReference"

},

"secretName": "@{linkedService().SecretName}"

}

},

"parameters": {

"Username": {

"type": "String"

},

"SecretName": {

"type": "String"

},

"BaseUrl": {

"type": "String"

}

}

}

}

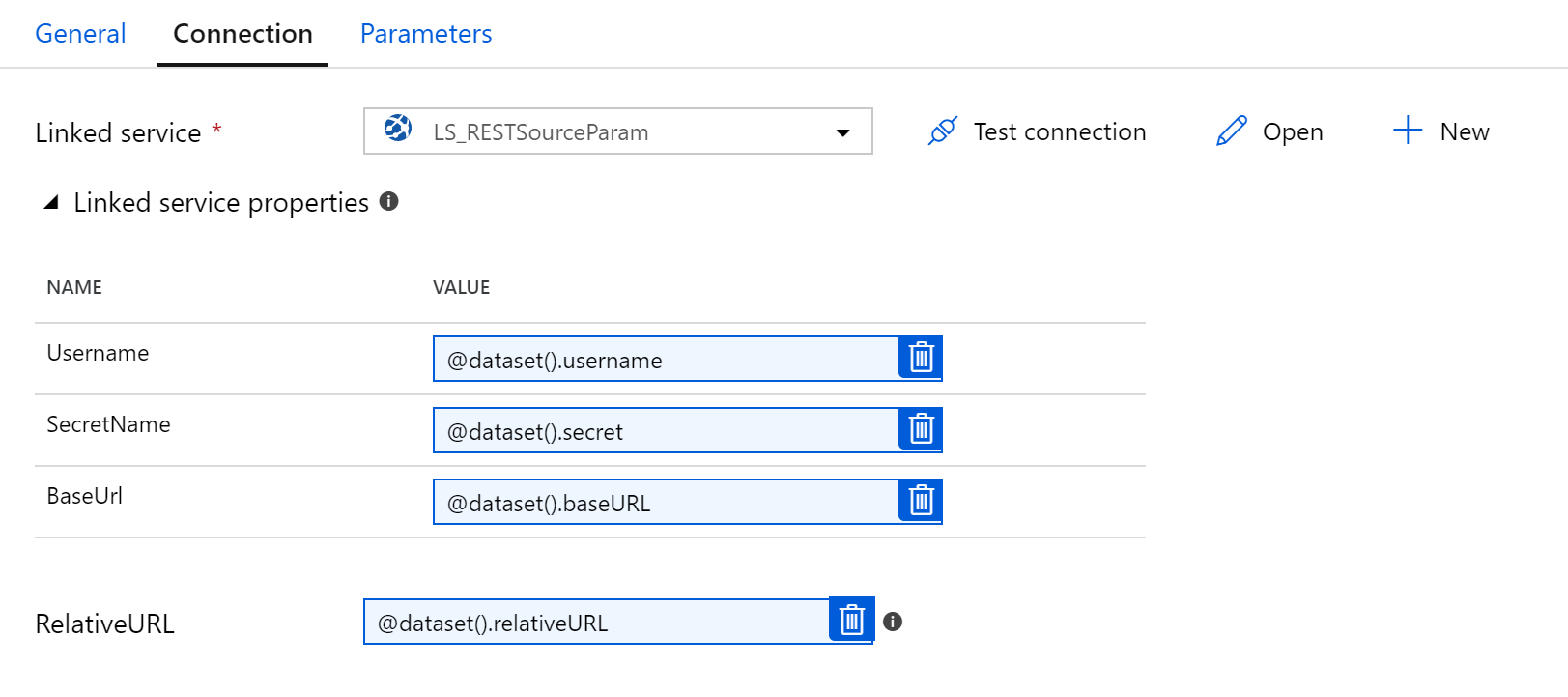

I have defined these three parameters in my dataset, along with one more parameter that is specific to the dataset (that doesn’t get passed to the linked service). I don’t need to set the default value on the Parameters tab of the dataset.

On the Connection tab of the dataset, I set the value as shown below. We can see that Data Factory recognizes that I have 3 parameters on the linked service being used. The relativeURL is only used in the dataset and is not used in the linked service. The value of each of these properties must match the parameter name on the Parameters tab of the dataset.

In my copy activity, I can see my 4 dataset parameters on the Source tab. There, I can write expressions to provide the values that should be passed through to the dataset, 3 of which are passed through to the linked service. In my case, this is a child pipeline that is called from a parent pipeline that passes in some values through pipeline parameters which are used in the expressions in the copy activity source.

And that’s it. I can run my pipeline and have it call different REST APIs using one linked service and one dataset.

Great article! Thanks for sharing Meagan! I had not realized we could access the Azure Secrets Manager this way. This can be very useful to keep secrets secure!

Thanks Meagan, I am truly stumped, I have followed your example and I cannot manage to get the linked service properties to display when setting up the data set.

Any thoughts

Sorry, without seeing your code, I don’t have any suggestions. I would start by validating that your linked service works. Test it and fill in the parameterized values. Make sure you have the parameters collection defined in your linked service as well as the reference to the parameter in the typeProperties.

Hi Meagan, crazy thing is that it is working now, i guess if you keep doing the same thing over and over again sometimes the result is different

Hi Meghan, just curious, what is the dataset type. I have a scenario where I need to store some URLs in keyvault as config and retrieve the same to use in subsequent web activity URL field.

There isn’t a dataset associated with the web activity that retrieves data from Key Vault. You just reference the output. There are instructions in MS Docs here https://docs.microsoft.com/en-us/azure/data-factory/how-to-use-azure-key-vault-secrets-pipeline-activities

Hi thanks much for the article. I am trying to call a REST api in a forEach loop passing the id to be sent over the relativeUrl dynamically. The id comes from the iteration as @item().id. After the execution of the pipeline, I would like to view which Copy activity(REST call) listed in the output of the pipeline used which item().id. When I view the “Input” and “Output” of the Copy activities, I do not see it. Is there a way I can inspect the value of the id that was used to construct the relativeUrl of the REST call after the execution? This will help me debug when the REST call comes back with an error. Appreciate your suggestions! Thank you.

Hi, Jay. In my typical framework, I have a parent pipeline with a Lookup and ForEach and then a child pipeline that gets called in the For Each that has the actual copy activity with the REST source. Then I pass all the info to the child pipeline via parameters and it shows up in the monitoring. Hope that helps.

I log additional data to a small database or blob storage, so I can use it for incremental loads and auditing.

Hi Meagan, thanks so much for the speedy response! Appreciate it. Since I have thousands of requests to make in the loop, I am not able to use a sub-pipeline for each call. But I did see an option to set ‘User Properties’ in the activity where I could set the value of the item().id. This shows up against each iteration that is listed in the monitoring. So that works! 🙂

I would also like to take your suggestions if you have any to handle rate limits on API calls. I am calling an API in a loop for a large number of ids. I set my forEach activity to run in parallel. Is there a good way to throttle and control the rate at which the copy activity (REST call) is executed in the loop?

The copy activity calls the REST api (source) and writes the response JSON file in the blob storage (Sink). This currently takes a comfortable amount of time for each iteration that I have not hit the rate limit in my testing. But I would like to know if there is a correct way to set the rate limit for such API calls running in a loop on the activities in the Pipeline.

Thank you so much for your insights!

Sorry, I don’t have much experience dealing with rate limits. The one thing I have done is to create a failure path if the copy activity fails and then add a wait activity for 30 seconds or 1 minute and have it try again when I hit concurrency limits. But if you are maxing out on the limit per hour or day, that wouldn’t help.

Thanks Meagan ! A very nicely explained article. I have a similar requirement to my project. I am able to pass the secret value (API token) from the Key Vault. Regarding the base URL value, may I know, how are you passing it to the baseURL parameter defined in the dataset ? Is it just a hardcoded value ?

Regards,

Mahesh

In my project, most values including the base url are stored in a database table, and I retrieve them using a Lookup activity. I iterate through the values using a For each loop and pass the values to a child pipeline via parameters.

Thanks for the reply !

This help me so much! thank you!! do you have an example of how to do the same but receiving a token?

I mean you sent a request and then it returns a token and with that token you can request the information.

Perhaps this post (or Dave’s post that I link to in there) will help: https://datasavvy.me/2020/07/09/refreshing-a-power-bi-dataset-in-azure-data-factory/

It gets an AAD auth token and then uses it in the next step.

Hi Meagan,

Thank you for this article, it has been helpful. I have one question. This works fine for us when we use in our dev environment, but when we use Azure DevOps to promote to QA and Prod, we are losing this portion of the json:

“password”: {

“type”: “AzureKeyVaultSecret”,

“store”: {

“referenceName”: “MyKeyVault”,

“type”: “LinkedServiceReference”

},

“secretName”: “@{linkedService().SecretName}”

}

Have you heard of this issue?

Thanks.

Sorry, I have not heard of that. I would log a support ticket or feedback item. I have found the ADF team to be very responsive and quick to help when I logged issues.

Thank you.

Thank you!!

I am using the Dataflow Source component instead of the pipeliine Copy component. When I select either inline or dataset for the sourcetype and select a dataset or a linked service, the parameters for that dataset do not show to set them like they do in the pipeline copy component. The params for the dataset also are not available to set in the “ExternalCall” component after selecting the linked service. How do you make these show or set them in the dataflow?

Sorry, I don’t do much with data flows. Perhaps this might help? https://learn.microsoft.com/en-us/answers/questions/100077/how-can-i-pass-parameters-to-sql-datasets-in-adf-m.